Geoguidance: navigation without infrastructure.

Our Geoguidance navigation technology is based on a simple principle: learning and recognizing the uniqueness of a building.

Robotic trucks running Driven by Balyo technology are equipped with a laser scanner dedicated to navigation (LIDAR*). They locate themselves by recognizing fixtures inside the building: walls, racks, columns, machines, etc. To do so, the robot continuously scans its surroundings through 360° and correlates what it sees in real time with the reference map produced during installation.

*LIDAR = Light Imaging Detection And Ranging
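To make "correlating a 360° scan with the reference map" more concrete, here is a minimal Python sketch of the first step any such system needs: turning one revolution of LIDAR range readings into 2D points and placing them on the map at an assumed robot pose. It assumes the scanner reports one range per equally spaced beam, starting along the robot's forward axis; the function names and the 30 m maximum range are illustrative assumptions, not Balyo's implementation.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Pose = Tuple[float, float, float]  # (x, y, heading in radians) in the map frame


def scan_to_points(ranges: List[float], max_range: float = 30.0) -> List[Point]:
    """Convert a 360-degree scan (one range per equally spaced beam) into
    (x, y) points in the robot's own frame. Beams with no valid return
    (range <= 0 or beyond max_range) are skipped."""
    n = len(ranges)
    points = []
    for i, r in enumerate(ranges):
        if r <= 0.0 or r > max_range:
            continue
        angle = 2.0 * math.pi * i / n  # beam i points at i * (360 / n) degrees
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points


def to_map_frame(points: List[Point], pose: Pose) -> List[Point]:
    """Rotate by the robot's heading, then translate by its position,
    so robot-frame points can be compared with the reference map."""
    x, y, theta = pose
    c, s = math.cos(theta), math.sin(theta)
    return [(x + c * px - s * py, y + s * px + c * py) for px, py in points]
```

For example, four beams each returning 2 m, seen from a robot at (10, 5) facing along the x axis, land at roughly (12, 5), (10, 7), (8, 5) and (10, 3) on the map.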

How does the robot recognize its environment?

Environment learning is a vital stage in the installation of our robotic trucks. Our field engineers map the building in three stages:

Stage 1

The field engineer drives the robot manually around the area(s) where it will work. During this first stage, the robot records everything its navigation laser scanner detects and transcribes the data into a draft 2D map: walls, racks, trucks, pallets, machines, etc.
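A common way to turn recorded scans into a 2D map is an occupancy grid. The minimal sketch below assumes the robot's pose for each scan is already known (in practice it would come from odometry or a SLAM algorithm) and simply counts how often each grid cell is hit; cells hit at least `min_hits` times are kept as occupied. Full mappers also trace the free space along each beam, which this simplification omits. All names and parameter values here are hypothetical.

```python
import math
from collections import Counter
from typing import Iterable, List, Set, Tuple

Cell = Tuple[int, int]
Pose = Tuple[float, float, float]  # (x, y, heading) in metres / radians


def scan_endpoints(ranges: List[float], pose: Pose) -> List[Tuple[float, float]]:
    """Map-frame (x, y) of each valid beam endpoint of a 360-degree scan."""
    x, y, theta = pose
    n = len(ranges)
    pts = []
    for i, r in enumerate(ranges):
        if r <= 0.0:
            continue  # no return on this beam
        a = theta + 2.0 * math.pi * i / n
        pts.append((x + r * math.cos(a), y + r * math.sin(a)))
    return pts


def build_draft_map(recording: Iterable[Tuple[Pose, List[float]]],
                    resolution: float = 0.05,
                    min_hits: int = 2) -> Set[Cell]:
    """Accumulate hits per grid cell over the whole manual drive and keep
    cells hit at least `min_hits` times, filtering out one-off returns."""
    hits: Counter = Counter()
    for pose, ranges in recording:
        for px, py in scan_endpoints(ranges, pose):
            hits[(math.floor(px / resolution), math.floor(py / resolution))] += 1
    return {cell for cell, count in hits.items() if count >= min_hits}
```

The resulting draft map still contains everything the scanner saw, including trucks and pallets that will not stay put.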

Stage 2

As the objective is to give our robots reliable reference points, the field engineer “cleans up” this draft map, removing movable objects such as trucks and pallets and leaving only the fixtures, e.g. walls, racks and columns. The result is the reference map. We then add virtual routes and pick-up and placement points according to your logistics flows and requirements.

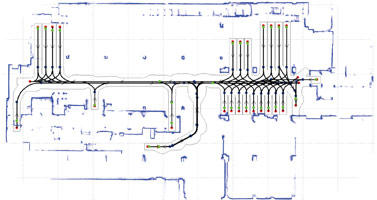
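The clean-up and route definition of Stage 2 can be pictured with two simple data structures: the reference map as a set of occupied grid cells, and the routes as a graph whose nodes include the pick-up and placement points. In this sketch the engineer's clean-up is modelled as removing a hand-marked set of movable cells, and a breadth-first search finds the route with the fewest segments. The node names, the graph and the search strategy are assumptions for illustration only.

```python
from collections import deque
from typing import Dict, List, Optional, Set, Tuple

Cell = Tuple[int, int]


def clean_map(draft: Set[Cell], movable: Set[Cell]) -> Set[Cell]:
    """Reference map = draft map minus the cells marked as movable objects
    (pallets, parked trucks, ...), leaving only the fixtures."""
    return draft - movable


# Hypothetical route network: named nodes, including pick-up and
# placement points, joined by virtual routes (undirected edges).
ROUTES: Dict[str, List[str]] = {
    "pickup_A": ["junction_1"],
    "junction_1": ["pickup_A", "junction_2", "place_B"],
    "junction_2": ["junction_1", "place_C"],
    "place_B": ["junction_1"],
    "place_C": ["junction_2"],
}


def plan_route(routes: Dict[str, List[str]], start: str, goal: str) -> Optional[List[str]]:
    """Breadth-first search for the path with the fewest route segments."""
    previous: Dict[str, Optional[str]] = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            step: Optional[str] = node
            while step is not None:  # walk back to the start
                path.append(step)
                step = previous[step]
            return path[::-1]
        for nxt in routes.get(node, []):
            if nxt not in previous:
                previous[nxt] = node
                queue.append(nxt)
    return None  # goal not reachable on the defined routes
```

For instance, `plan_route(ROUTES, "pickup_A", "place_C")` returns `["pickup_A", "junction_1", "junction_2", "place_C"]`.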

Stage 3

The reference map is loaded into the robot’s processor. The robot continuously compares what it sees in real time (via its laser scanner) with what it knows (the reference map), which lets it locate itself and move. In this respect, the robot works much like the human brain of a person on the move.
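One textbook way to compare a live scan with a reference map is brute-force correlative scan matching: try candidate poses around the last known position and keep the one where the most scan points fall on occupied cells of the map. It is shown here as a generic illustration of the idea, not as Balyo's algorithm; the search window, step sizes and grid resolution are arbitrary assumptions.

```python
import math
from typing import List, Set, Tuple

Cell = Tuple[int, int]
Pose = Tuple[float, float, float]  # (x, y, heading) in the map frame


def score_pose(points: List[Tuple[float, float]], pose: Pose,
               reference: Set[Cell], resolution: float) -> int:
    """Count how many robot-frame scan points land on an occupied cell of
    the reference map if the robot is assumed to be at `pose`."""
    x, y, theta = pose
    c, s = math.cos(theta), math.sin(theta)
    hits = 0
    for px, py in points:
        mx, my = x + c * px - s * py, y + s * px + c * py
        if (math.floor(mx / resolution), math.floor(my / resolution)) in reference:
            hits += 1
    return hits


def localize(points: List[Tuple[float, float]], guess: Pose, reference: Set[Cell],
             resolution: float = 0.1, xy_window: float = 0.5, xy_step: float = 0.1,
             angle_window: float = math.radians(10),
             angle_step: float = math.radians(1)) -> Pose:
    """Exhaustively search a window around `guess` and return the pose
    whose scan overlaps the reference map best."""
    gx, gy, gt = guess
    n_xy = round(xy_window / xy_step)
    n_a = round(angle_window / angle_step)
    best_pose, best_score = guess, -1
    for i in range(-n_xy, n_xy + 1):
        for j in range(-n_xy, n_xy + 1):
            for k in range(-n_a, n_a + 1):
                pose = (gx + i * xy_step, gy + j * xy_step, gt + k * angle_step)
                score = score_pose(points, pose, reference, resolution)
                if score > best_score:
                    best_pose, best_score = pose, score
    return best_pose
```

Because every scan point votes, a few points that no longer match (a moved pallet, a passing person) lower the score without necessarily changing which pose wins.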

How does the robot move?

Just as with autonomous cars, we supply the driver. Balyo has recently developed a proprietary processor that acts as the robot’s “brain”. Connected to the sensors and to all the mechanical components of the material handling truck, it can take control of the truck. Like the human brain, the processor sends commands to the robot to move forward, stop, reverse, turn, lift or lower the forks, and so on.
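The processor's role of turning decisions into truck commands can be sketched as a small command set applied to a toy kinematic model. The command names mirror the list above; the `TruckState` fields, the meaning of `value` for each command and the simple integration step are hypothetical and say nothing about how Balyo's processor actually drives the truck's actuators.

```python
import math
from dataclasses import dataclass
from enum import Enum, auto


class Command(Enum):
    FORWARD = auto()
    REVERSE = auto()
    TURN = auto()
    STOP = auto()
    LIFT = auto()
    LOWER = auto()


@dataclass
class TruckState:
    x: float = 0.0
    y: float = 0.0
    heading: float = 0.0      # radians
    speed: float = 0.0        # m/s, negative when reversing
    fork_height: float = 0.0  # metres


def apply(state: TruckState, cmd: Command, value: float = 0.0, dt: float = 0.1) -> TruckState:
    """Update the toy truck model for one control step of `dt` seconds.
    `value` is a speed (m/s) for FORWARD/REVERSE, a turn rate (rad/s) for
    TURN and a fork speed (m/s) for LIFT/LOWER; STOP ignores it."""
    if cmd is Command.FORWARD:
        state.speed = abs(value)
    elif cmd is Command.REVERSE:
        state.speed = -abs(value)
    elif cmd is Command.STOP:
        state.speed = 0.0
    elif cmd is Command.TURN:
        state.heading += value * dt
    elif cmd is Command.LIFT:
        state.fork_height += abs(value) * dt
    elif cmd is Command.LOWER:
        state.fork_height = max(0.0, state.fork_height - abs(value) * dt)
    # Move the truck along its heading at the current speed.
    state.x += state.speed * math.cos(state.heading) * dt
    state.y += state.speed * math.sin(state.heading) * dt
    return state
```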

How does the robot know where it's going?

You decide. Our clients assign the robot’s destinations (pick-up and placement points) and missions themselves. For more information, see our page on how missions and the Robot Manager work.

How is your system different from Natural Navigation or Vision Navigation?

Natural Navigation

Some market players offer so-called “natural” navigation. This kind of navigation, which also requires no infrastructure, has existed for many years. Historically, it has been limited to applications such as AGVs in hospital service corridors, where the navigation environment is simple and controlled.

This technology searches the environment for specific reference points (“feature recognition”), such as a particular door or pillar, and correlates them with a database, or library, of these points. Its limitations are well known: it does not support operations in complex, regularly reconfigured environments, which BALYO technology handles. Where BALYO correlates all the fixtures in real time, natural navigation searches for “virtual reflectors”. This conceptual difference gives Driven by Balyo technology a much higher level of robustness to change.
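The feature-recognition approach described above comes down to data association: each feature the robot detects is looked up in a library of surveyed landmarks. The sketch below uses nearest-neighbour matching within a distance gate; the landmark names, coordinates and the 0.5 m gate are invented for illustration. A detection with no landmark inside the gate (for example because the landmark has been moved) yields `None` and contributes nothing to localization, whereas correlating the whole scan against a map draws on every return.

```python
import math
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]

# Hypothetical landmark library: named reference points surveyed once.
LIBRARY: Dict[str, Point] = {
    "door_3": (12.0, 0.0),
    "pillar_A": (20.0, 8.0),
    "pillar_B": (20.0, 16.0),
}


def associate(detections: List[Point], library: Dict[str, Point],
              gate: float = 0.5) -> Dict[int, Optional[str]]:
    """Match each detected feature (map frame) to the closest library
    landmark within `gate` metres, or to None if nothing is close enough."""
    matches: Dict[int, Optional[str]] = {}
    for i, (dx, dy) in enumerate(detections):
        best_name, best_dist = None, gate
        for name, (lx, ly) in library.items():
            d = math.hypot(dx - lx, dy - ly)
            if d <= best_dist:
                best_name, best_dist = name, d
        matches[i] = best_name
    return matches
```

For example, detections at (12.1, 0.1), (20.0, 8.2) and (30.0, 30.0) are matched to `door_3`, `pillar_A` and `None` respectively.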

Vision Navigation

Navigation by vision camera is a totally different concept: the robot navigates using images taken in real time by a video camera. This makes it sensitive to lighting conditions, because, unlike the LIDAR used by BALYO, cameras do not emit their own light. On a functional level, this technology is currently limited: it is not usually possible to pick up or place pallets automatically.

What does this mean for you?

No intrusive infrastructure

Quick Installation

Simplified circuit changes

Easy to update and redeploy

Discover how our robots navigate!